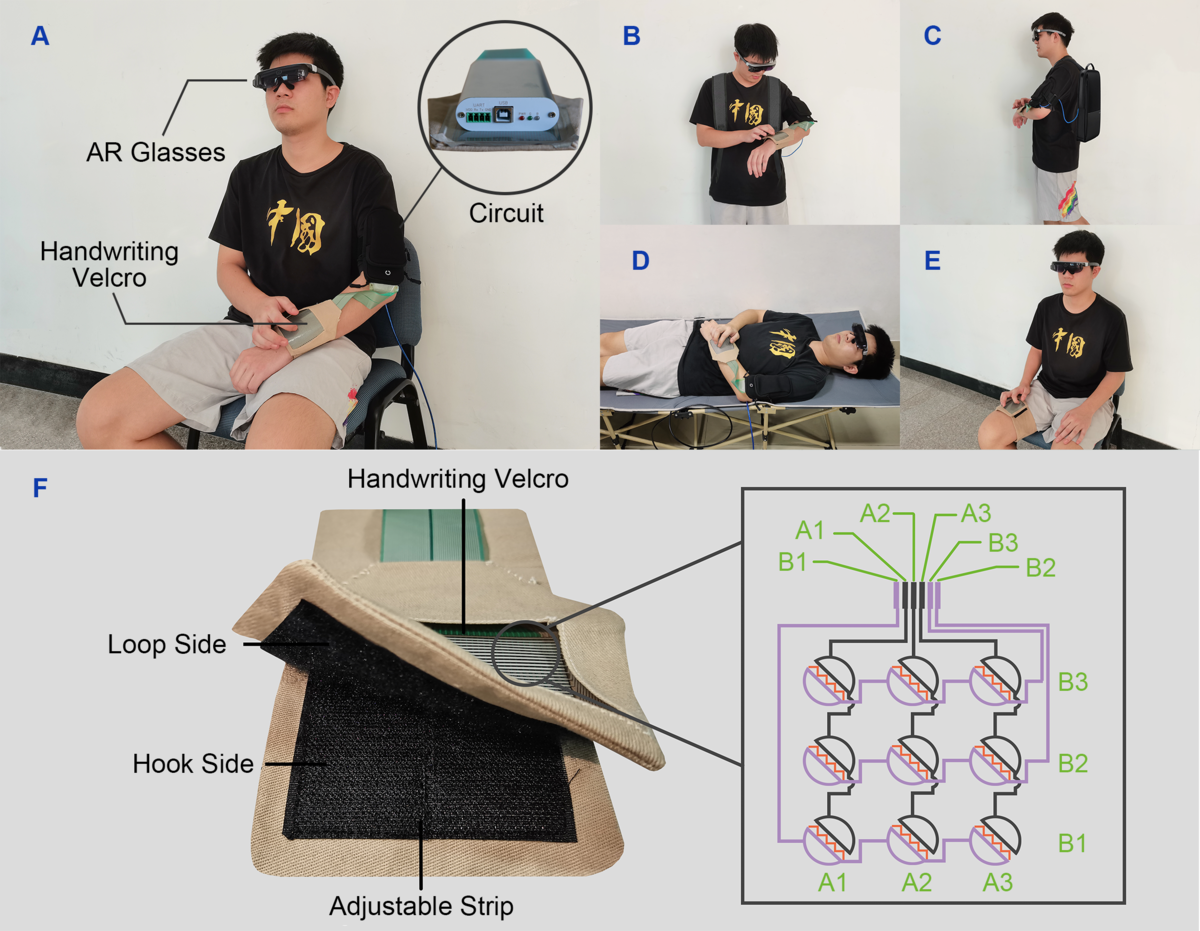

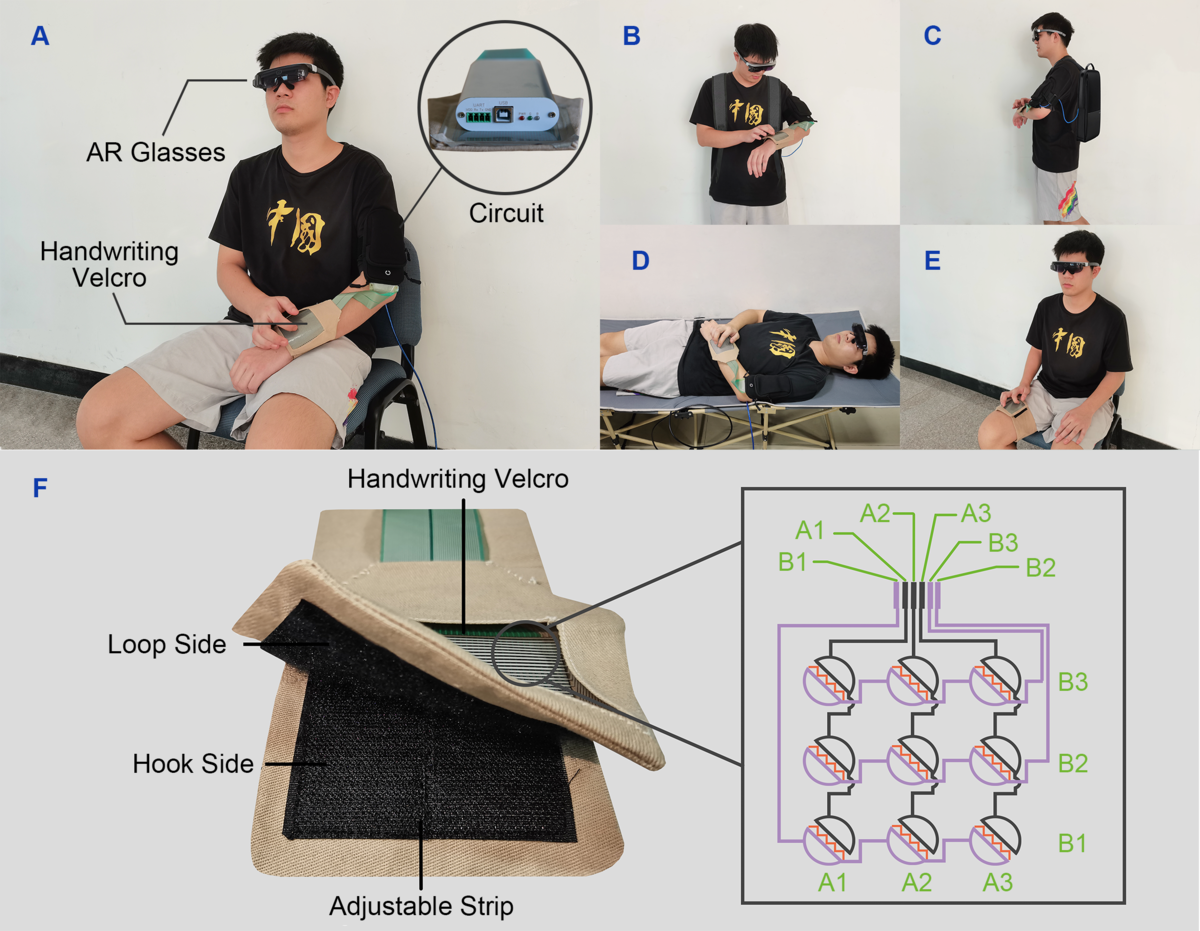

Handwriting Velcro: Endowing AR Glasses with Personalized and Posture-adaptive Text Input Using Flexible Touch Sensor

Lishuang Zhan, Tianyang Xiong, Hongwei Zhang, Shihui Guo*, Xiaowei Chen, Jiangtao Gong, Juncong Lin, Yipeng Qin

Abstract

Text input is a desired feature for AR glasses. Although various input modalities already exist (e.g., voice, mid-air gestures), the small number of fixed input postures they offer can hardly meet the diverse demands of different input scenarios. In this paper, we present Handwriting Velcro, a novel text input solution for AR glasses based on flexible touch sensors. The distinct advantage of our system is that it can easily stick to different body parts, thus endowing AR glasses with posture-adaptive handwriting input. We explored the design space of on-body device positions and identified the best interaction positions for various user postures. To flatten users' learning curves, we adapt our device to the established writing habits of different users by training a 36-character (i.e., A-Z, 0-9) recognition neural network in a human-in-the-loop manner. This personalization ultimately achieves a low average error rate of 0.005 for users with different writing styles. Subjective feedback shows that our solution performs well in system practicability and social acceptance. Empirically, we conducted a heuristic study to explore and identify the best interaction Position-Posture Correlation. Experimental results show that Handwriting Velcro outperforms similar work and commercial products in both practicality (12.3 WPM) and user-friendliness in different contexts.


Fujian Public Security Network Filing No. 35020302034954
